Advantage Actor-Critic with Optuna

Introduction

The performance of online reinforcement learning algorithms such as Advantage Actor-Critic (A2C) is very sensitive to hyperparameters. To automate the search for a well-performing policy, this project uses Optuna, a hyperparameter optimization framework. We find a set of hyperparameters that yields a policy outperforming the publicly available Stable-Baselines3 A2C policy on the HalfCheetah MuJoCo Gym environment.

Method

The policy takes the 17-dimensional continuous state of the environment as input and outputs a 6-dimensional continuous action. Training involves two networks: an actor and a critic. The actor learns to map states to actions, and the critic learns to predict the expected return from a given state, which serves as a baseline. We compute advantages with Generalized Advantage Estimation (GAE) and normalize them.
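The advantage computation can be sketched as follows. This is a minimal NumPy illustration of standard GAE with advantage normalization, not the project's own code. The function name `compute_gae`, its argument layout, and the convention that `dones[t]` marks a terminal `s_{t+1}` are assumptions. The critic's regression targets are taken as the λ-returns `advantages + values`, computed before normalization.

```python
import numpy as np

def compute_gae(rewards, values, next_values, dones, gamma=0.99, lam=0.95, normalize=True):
    """Generalized Advantage Estimation over a batch of T transitions (sketch).

    rewards[t]     : r_t
    values[t]      : critic estimate V(s_t)
    next_values[t] : critic estimate V(s_{t+1})
    dones[t]       : 1.0 if s_{t+1} is terminal (no bootstrapping, recursion is cut)
    Returns (advantages, critic_targets).
    """
    rewards, values, next_values, dones = [
        np.asarray(x, dtype=np.float64) for x in (rewards, values, next_values, dones)
    ]
    advantages = np.zeros(len(rewards), dtype=np.float64)
    gae = 0.0
    for t in reversed(range(len(rewards))):
        not_done = 1.0 - dones[t]
        # One-step TD error: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
        delta = rewards[t] + gamma * next_values[t] * not_done - values[t]
        # A_t = delta_t + gamma * lambda * A_{t+1}, reset at episode boundaries
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
    # Lambda-return targets for the critic, taken from the unnormalized advantages
    critic_targets = advantages + values
    if normalize:
        # Zero mean, unit standard deviation across the batch
        advantages = (advantages - advantages.mean()) / (advantages.std() + 1e-8)
    return advantages, critic_targets

# With gamma = lambda = 1 and a zero critic, advantages are plain reward-to-go sums.
adv, targets = compute_gae([1, 1, 1], [0, 0, 0], [0, 0, 0], [0, 0, 1],
                           gamma=1.0, lam=1.0, normalize=False)
print(adv)  # [3. 2. 1.]
```

With λ = 1, as in the configuration Optuna found (gae lambda = 1.0), GAE reduces to the discounted reward-to-go minus the critic's baseline, bootstrapping from the critic only where a batch ends mid-episode; smaller λ lowers variance at the cost of bias.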

Optuna searches for the best set of hyperparameters using Bayesian optimization. Each trial samples one value for every hyperparameter from its search space and trains a policy with that configuration. Optuna prunes unpromising trials early and selects the best trial by average evaluation return. We search over the following hyperparameters: model size (number of layers and layer size), discount, learning rate, GAE lambda, temporal-difference (TD) lambda, number of baseline gradient steps, and batch size.

Results

We compare our best Optuna-tuned policy on the HalfCheetah-v4 environment with the Stable-Baselines3 A2C policy (sb3-a2c), the only pretrained A2C policy for this environment that we could find online. The sb3-a2c policy can be found here.

| Policy | Average return |
|---|---|
| Ours | 3828.84 |
| sb3-a2c | 3096.61 |
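The relative improvement in average return works out as follows:

```latex
\frac{3828.84 - 3096.61}{3096.61} = \frac{732.23}{3096.61} \approx 0.236 \approx 24\%
```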

Our model achieves a 24% higher average return than sb3-a2c. Below are the trajectory rollouts of both policies.

In the video above, our agent runs faster and more stably than the sb3-a2c policy.

Below is the set of hyperparameter values found by Optuna to train our policy.

| Policy | discount | learning rate | GAE lambda | TD lambda | baseline grad steps | batch size | normalize advantage | reward to go | num layers | layer size |
|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 0.99 | 1.0e-3 | 1.0 | 1.0 | 50 | 25,000 | true | true | 3 | 256 |
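The reported configuration also fixes the network shapes. The minimal NumPy sketch below rests on assumptions not stated in the text: "num layers" counts hidden layers, hidden units use tanh, and the actor outputs the mean of a diagonal Gaussian with a learned, state-independent log standard deviation. The `CONFIG` key names and the helper functions are hypothetical. It builds untrained actor and critic MLPs for HalfCheetah's 17-dimensional observations and 6-dimensional actions and counts their parameters.

```python
import numpy as np

# Hyperparameters from the table above (key names are hypothetical).
CONFIG = {
    "discount": 0.99, "learning_rate": 1.0e-3, "gae_lambda": 1.0, "td_lambda": 1.0,
    "baseline_grad_steps": 50, "batch_size": 25_000,
    "normalize_advantage": True, "reward_to_go": True,
    "num_layers": 3, "layer_size": 256,
}
OBS_DIM, ACT_DIM = 17, 6  # HalfCheetah state and action sizes

def init_mlp(in_dim, out_dim, num_layers, layer_size, rng):
    """(W, b) pairs: num_layers hidden layers (assumption) plus a linear output layer."""
    sizes = [in_dim] + [layer_size] * num_layers + [out_dim]
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp_forward(params, x):
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)  # tanh hidden activation (assumption)
    W, b = params[-1]
    return x @ W + b  # linear output

def num_params(params):
    return sum(W.size + b.size for W, b in params)

rng = np.random.default_rng(0)
actor = init_mlp(OBS_DIM, ACT_DIM, CONFIG["num_layers"], CONFIG["layer_size"], rng)
critic = init_mlp(OBS_DIM, 1, CONFIG["num_layers"], CONFIG["layer_size"], rng)
log_std = np.zeros(ACT_DIM)  # state-independent Gaussian log std (assumption)

obs = rng.normal(size=OBS_DIM)               # stand-in for a HalfCheetah observation
mean = mlp_forward(actor, obs)               # actor: state -> action mean
action = rng.normal(mean, np.exp(log_std))   # sample a 6-D action
value = mlp_forward(critic, obs)[0]          # critic: state -> expected return estimate
print(num_params(actor) + log_std.size, num_params(critic))  # 137740 136449
```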

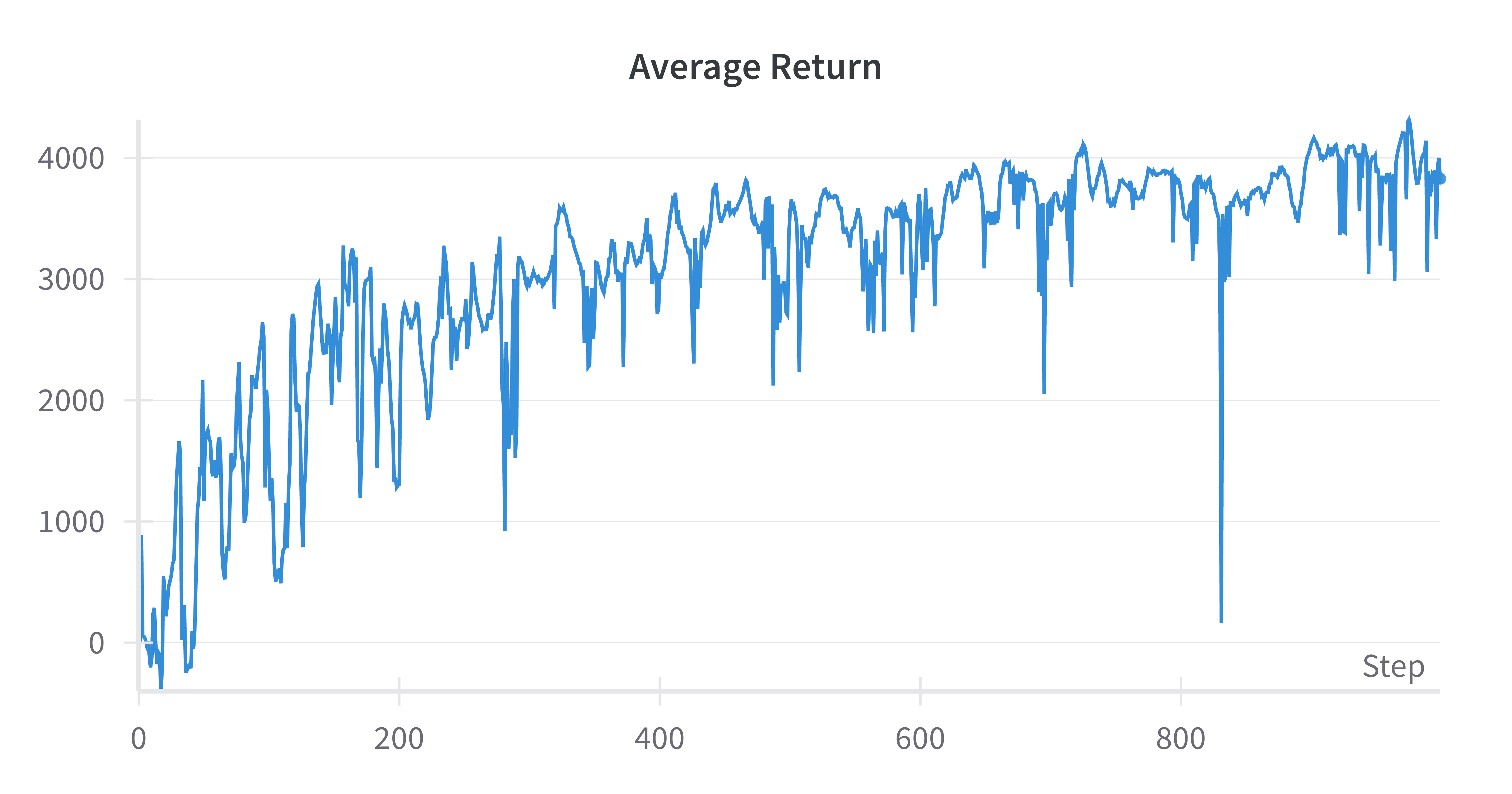

And here is the curve for the average evaluation return of our policy during training.

Conclusion

This project demonstrates the effectiveness of automated hyperparameter optimization with Optuna for improving the performance of reinforcement learning algorithms. By tuning key hyperparameters of the Advantage Actor-Critic (A2C) algorithm, we achieved a 24% higher average return than the Stable-Baselines3 A2C policy on the HalfCheetah MuJoCo Gym environment.

Our approach uses Bayesian optimization to systematically explore the hyperparameter space, producing a policy that outperforms the baseline both quantitatively (higher average return) and qualitatively (faster, more stable running in the trajectory rollouts). This highlights the potential of automated hyperparameter search frameworks like Optuna to streamline the development of reinforcement learning agents for complex control tasks.

Citation

@article{savva2024a2coptuna,
  title={Advantage Actor-Critic with Optuna},
  author={Georgy Savva},
  year={2024},
  url={https://georgysavva.github.io/projects/a2c-optuna/},
}